Big-O Notation in Algorithms

Contents

In my previous article, I talked about what complexity analysis is in algorithms and when best and worst scenarios occur. In this article, I will explain how to do Big-O (Big-O) analysis of algorithms.

Big-O notation is one of the most important tools we use to measure the performance and time complexity of an algorithm we designed. In other words, it is the language and metric we use to describe the effectiveness of the algorithm.

Designing an algorithm without knowing what the Big-O concept is can damage the algorithm you have developed.

We need different data structures when designing algorithms. Like Array, LinkedList, Queue, Stack, Binary Tree, Graph. Or we can run different algorithms on the same data structure (eg Array). Bubble Sort, Insertion Sort, Quick Sort, Heap Sort, Merge Sort sorting algorithms are examples of this.

The data structures we choose have different effects on the quality of the algorithm, the resources it consumes, its speed and usage. That’s why software developers need to understand this issue well.

Some Big-O Terms and Scenarios

The most common runtimes are O(logn), O(nlog n), O(n), O(n²). But there is no definitive list of runtimes. Because many variables are taken into account when calculating the working time.

Here are the Big-O times we come across frequently:

- O(1) → Constant

- O(N) → Linear

- O(N²) → Quadratic

- O(logN) → Logarithmic

- O(NlogN) → Linearithmic

- O(2^N)→ Exponential

- O(N!) → Factorial

Where N is the size of the data set or the number of elements, while c is a fixed value.

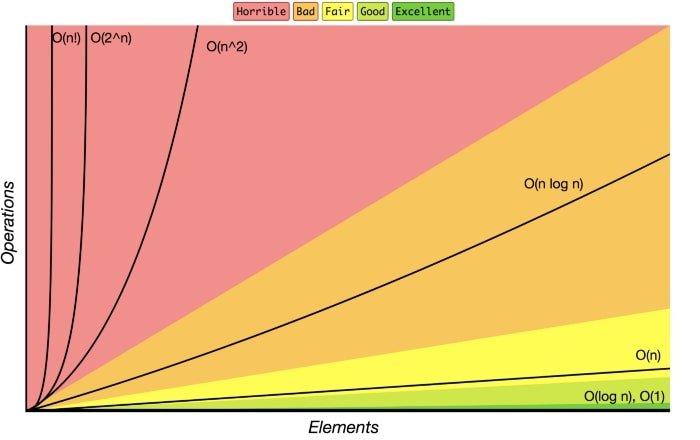

The chart above shows the Big-O classification of the algorithm according to the data set and the operation performed.

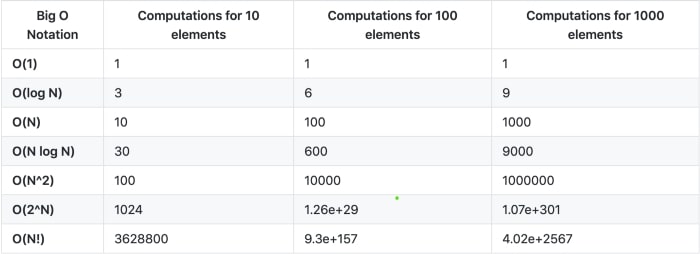

In this table, there are comparisons of the most used Big-O terms according to data inputs of different sizes.

Now let’s better understand some Big-O sizes with examples.

1) Constant Complexity – O(1)

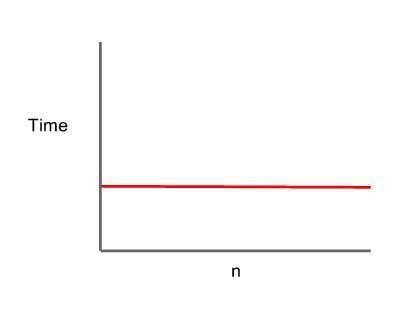

No matter how large the data set entered into the algorithm of constant complexity, the amount of work time and the amount of resources used is constant.

- It runs in O(1) time.

For example,

var arr = [ 1,2,3,4,5];

arr[1]; // => 2Regardless of the size of the array entered as input to the algorithm, the runtime will always remain constant. Because we find the 1st element of the array.

2) Linear Complexity – O(N)

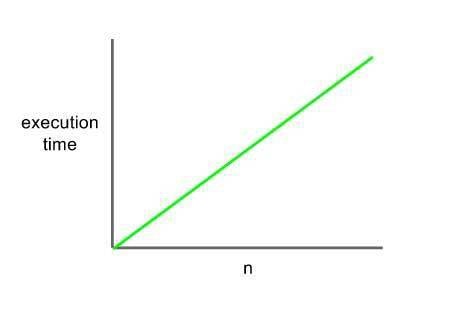

In linear complexity, as the size of the data set we enter into the algorithm increases, the runtime increases linearly. In other words, there is a direct proportionality between the size of the entered data set and the working time.

- It runs in O(N) time.

As an example, let’s consider a stack of CDs that we have. We must spend as much time as the number of CDs to reach the bottom of this CD stack. If the number of CDs increases, the time we spend will increase proportionally.

for(int i=0; i<n; i++){

print("Hello World");

}Here, the program will output n “Hello Worlds”. Therefore, as the number of n increases, the running time of the program will increase linearly.

3) Quadratic Complexity – O(N²)

Simply put, the runtime is proportional to the square of the input size. That is, if the input size is 2, 4 transactions will occur, and if it is 8, 16 transactions will occur.

- It works in O(N²) time.

This complexity is often seen in sorting algorithms.

Two nested for loops are a good example of this.

for(int i=0; i<arr.length; i++){

for(int j=0; j<arr.length; j++){

print("Hello World");

}

}The runtime in a single for loop is O(N). However, when using 2 nested for loops, the runtime is O(N²).

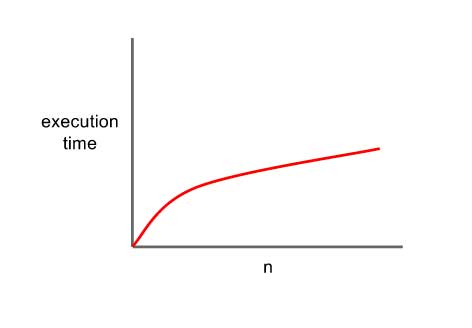

4) Logarithmic Complexity – O(log N)

Logarithmic complexity generally appears in algorithms that divide the problem into two at a time. In other words, the algorithm has logarithmic time complexity if the time it takes to run the algorithm is proportional to the logarithm of the input size N.

- It runs in O(logN) time.

for(let i=n; i>1; i/=2){

console.log(i);

}5) Linearitmik Complexity – O(NlogN)

Linearithmic time complexity is slightly slower than a linear algorithm, but still much better than a quadratic algorithm.

- It runs in O(NlogN) time.

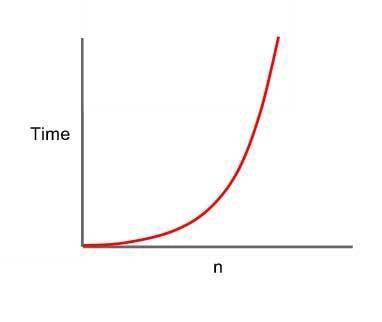

6) Exponential Complexity – O(2^N)

The exponential complexity (base 2) is seen in algorithms as the inverse of logarithm and the number of operations exponentially.

These types of functions, which increase exponentially in terms of processing, consume your system. Such calculations should be avoided as much as possible.

- It runs in O(2^N) time.

An example of exponential complexity is all the subsets of an array.

powerset('') // ...

// n = 0, f(n) = 1;

powerset('a') // , a...

// n = 1, f(n) = 2;

powerset('ab') // , a, b, ab...

// n = 2, f(n) = 4;

powerset('abc') // , a, b, ab, c, ac, bc, abc...

// n = 3, f(n) = 8;

powerset('abcd') // , a, b, ab, c, ac, bc, abc, d, ad, bd, abd, cd, acd, bcd...

// n = 4, f(n) = 16;

powerset('abcde') // , a, b, ab, c, ac, bc, abc, d, ad, bd, abd, cd, acd, bcd...

// n = 5, f(n) = 32;Here, an array with no elements has 1 subset; When an element is added to the array, it has 2 subsets. Then when we add another element to the array, the array will have 4 subsets. In this way, the more elements we add to the array, the more subsets of the array increase by 2^N.

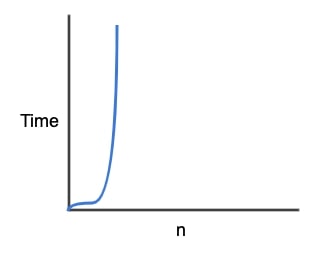

7) Factorial Complexity – O(N!)

The factorial is the product of all positive integers less than itself. Therefore, the complexity of algorithms with factorial complexity grows quite rapidly. So stay away from algorithms with this complexity.

- It runs in O(N!) time.

getPermutations('ab') // ab, ba...

// n = 2, f(n) = 2;

getPermutations('abc') // abc, acb, bac, bca, cab, cba...

// n = 3, f(n) = 6;

getPermutations('abcd') // abcd, abdc, acbd, acdb, adbc, adcb, bacd...

// n = 4, f(n) = 24;

getPermutations('abcde') // abcde, abced, abdce, abdec, abecd, abedc, acbde...

// n = 5, f(n) = 120;Final Thoughts

In this article, I tried to explain what the Big-O notation is in algorithms and the Big-O terms that we encounter most with examples.

Resources:

- https://www.bigocheatsheet.com/

- https://falconcoder.com/2019/11/22/algorithm-analysis-with-bigo-notation/

- https://medium.com/kodcular/nedir-bu-big-o-notation-b8b9f1416d30

- https://medium.com/algorithms-data-structures/algorithm-karma%C5%9F%C4%B1kl%C4%B1%C4%9F%C4%B1-big-o-5f14316890a4

- https://medium.com/@busrauzun/cracking-the-coding-interviewdan-notlar-b%C3%B6l%C3%BCm-4-big-o-b9f9ce7fcf13